OX HE Tutorial 1M

Revision as of 11:10, 3 March 2011

Tutorial: Highly Available OX HE Setup for up to 1 Million Users

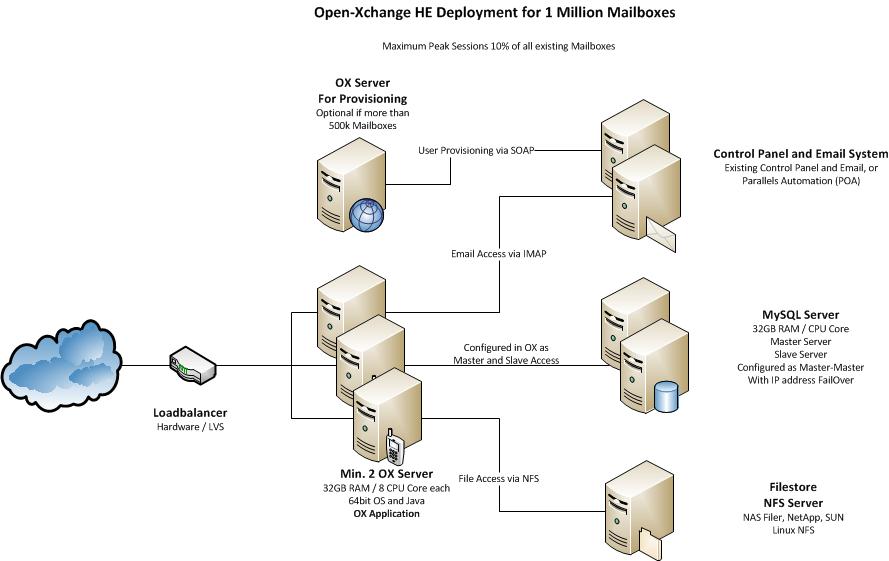

This article describes what you need for a typical OX HE setup for up to 1,000,000 users that is fully clustered, highly available and scales flexibly.

It contains everything you need to:

- Understand the design of the OX HE setup including additional services

- Install the whole system based on the relevant articles

- Find pointers to the next steps of integration

System Design

The system is designed to provide maximum functionality and availability with a minimum of hardware. If the services on one OX server fail, the load balancer handles this transparently. If one MySQL server fails, it is sufficient for the other MySQL server in the cluster to take over its IP address for the system to stay fully operational.

Core Components for OX HE

- At least two (three recommended) OX HE servers (HW recommendation: 32GB RAM / 8 cores each)

- At least one MySQL cluster with two servers in Master-Master configuration (HW recommendation: 32GB RAM / 8 cores each)

- NFS server to store documents and files

- Recommended for more than 500,000 mailboxes: one OX HE server dedicated to user provisioning (HW recommendation: 16GB RAM / 4 cores)

Infrastructure Components not delivered by OX

- An email system providing IMAP and SMTP

- A control panel for creation and administration of users

- A Load Balancer in front of the OX servers (optional, recommended)

Overview of Installation Steps

To deploy the described OX setup, the following steps need to be done.

Mandatory Steps

- Initialize and configure MySQL database servers

- Install and configure OX on all servers

Steps depending on your environment

- Implement Load Balancer

- Connect Control Panel

- Connect Email System

Recommended Optional Next Steps

- Automated Frontend Tests

- Upsell Plugin

- Mobile Autoconfiguration

- Automatic FailOver

- Branding

Mandatory Installation Steps - Instructions & Recommendations

The following steps need to be done in every case to get OX up and running:

Initialize and configure the MySQL databases on both servers

MySQL is set up in a Master-Master configuration to keep the data consistent on both servers. If one machine fails, the other machine takes over all functionality.

Database setup for clustered environments

Install and configure OX on both servers

OX is installed on at least two servers. It is configured to write to the first MySQL database and to read from the second MySQL database of a cluster, which distributes the load as evenly as possible during normal operation. During failover, the working MySQL server takes over the IP address of the failed one, so the system stays operational.

Open-Xchange setup and configuration for clustered environments

The NFS share is mounted on all machines and registered as a filestore.
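As a minimal sketch of the mount, assuming a hypothetical NFS server named nfs01 that exports /export/filestore, the following shell function prints an /etc/fstab entry for the mount point /var/opt/filestore (the path registered as filestore later in this guide). The function name and the mount options are assumptions; the filestore article remains the reference for the actual NFS setup.

```shell
# Print an /etc/fstab entry for the shared OX filestore.
# Arguments: NFS server, exported directory, local mount point.
# nfs01 and /export/filestore below are hypothetical example values.
nfs_fstab_line() {
    server=$1
    export_dir=$2
    mount_point=$3
    # _netdev marks the mount as network-dependent (option choice is an assumption)
    printf '%s:%s %s nfs defaults,_netdev 0 0\n' "$server" "$export_dir" "$mount_point"
}

nfs_fstab_line nfs01 /export/filestore /var/opt/filestore
```

Appending the same line to /etc/fstab on every machine and running `mount /var/opt/filestore` keeps the mount path identical everywhere, which the filestore registration requires.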

Filestore setup for clustered environments

Session load balancing

Since the configuration of system services for the respective operating system is already described in the general installation guides, this guide focuses on the specifics of a distributed setup. Please refer to the installation guides for any configuration not mentioned here.

The web server in this setup is a pure frontend server: it accepts and responds to requests sent by clients, but it does not contain any groupware logic. All requests are forwarded to the Open-Xchange Servers through the AJP13 protocol. The configuration provides round-robin session load balancing: both Open-Xchange Servers are configured as backends, each with a 50:50 probability of being chosen to answer a request. Once a new session is created, it is bound to the groupware server on which it was created.
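The interplay of round-robin distribution and sticky sessions can be illustrated with a small simulation. This is a hypothetical shell sketch, not Apache's implementation: it assumes the two routes OX1 and OX2 and a JSESSIONID value of the form <id>.<route>. A request without a known route is assigned to the next backend in turn; a request whose cookie carries a known route stays on that backend.

```shell
# Hypothetical simulation of sticky round-robin balancing; not Apache code.
ROUTES="OX1 OX2"
next=0    # counts new sessions; selects the backend for the next one

# pick_backend COOKIE: set BACKEND to the route that should answer the request.
pick_backend() {
    cookie=$1
    suffix=${cookie#*.}                 # text after the first dot, e.g. "OX1"
    if [ "$suffix" != "$cookie" ]; then # the cookie actually contained a dot
        for r in $ROUTES; do
            if [ "$suffix" = "$r" ]; then
                BACKEND=$r              # sticky: session is bound to this route
                return 0
            fi
        done
    fi
    # new (or unknown) session: take the next backend in round-robin order
    set -- $ROUTES
    shift $(( next % $# ))
    BACKEND=$1
    next=$(( next + 1 ))
}

pick_backend "";           echo "$BACKEND"   # new session  -> OX1
pick_backend "";           echo "$BACKEND"   # new session  -> OX2
pick_backend "abc123.OX1"; echo "$BACKEND"   # bound to OX1 -> OX1
```

The function sets a variable instead of printing its result because a command substitution would run it in a subshell and lose the round-robin counter.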

The web server needs only a small set of packages, essentially the open-xchange-gui packages; most of the additional packages are language packs or plugins. First add the Open-Xchange software repository to the package manager configuration, then install the open-xchange-gui package on the web server:

$ apt-get install open-xchange-configjump-generic-gui \
    open-xchange-gui open-xchange-gui-wizard-plugin-gui \
    open-xchange-online-help-de \
    open-xchange-online-help-en open-xchange-online-help-fr

This installs the Open-Xchange user interface, Apache 2 and several other services as dependencies. The Apache module proxy_ajp handles all communication with the Open-Xchange Servers, and its configuration also contains the session balancing setup. It essentially defines two backend nodes and forwards servlet paths to them according to their loadfactor. The loadfactor can be customized if the backend servers differ in performance. The route property is important: it specifies a unique ID for each backend server and is used later when setting up the Open-Xchange Servers. See the Apache mod_proxy_ajp documentation for more details.
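Since loadfactor values act as relative weights, each member's expected share of requests is roughly its loadfactor divided by the sum of all loadfactors (a simplification of mod_proxy_balancer's request-counting method). The following sketch reads BalancerMember lines from stdin and prints these shares per route. The function name is hypothetical, and members without an explicit loadfactor are counted with Apache's default of 1.

```shell
# Print each BalancerMember route with its expected share of requests,
# assuming share = loadfactor / sum(loadfactors). Reads config lines on stdin.
loadfactor_shares() {
    awk '
        $1 == "BalancerMember" {            # commented-out members start with "#"
            lf = 1; route = "?"             # Apache default loadfactor is 1
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^loadfactor=/) lf = substr($i, 12)
                if ($i ~ /^route=/)      route = substr($i, 7)
            }
            n++; routes[n] = route; lfs[n] = lf; total += lf
        }
        END {
            for (i = 1; i <= n; i++)
                printf "%s %.0f%%\n", routes[i], 100 * lfs[i] / total
        }'
}

# Example with unequal backends:
printf '%s\n' \
    'BalancerMember ajp://10.20.30.213:8009 loadfactor=75 route=OX1' \
    'BalancerMember ajp://10.20.30.215:8009 loadfactor=25 route=OX2' |
    loadfactor_shares
# OX1 75%
# OX2 25%
```

Running `loadfactor_shares < /etc/apache2/conf.d/proxy_ajp.conf` against the configuration in this guide should report 50% for each route.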

$ vim /etc/apache2/conf.d/proxy_ajp.conf

<Location /servlet/axis2/services>
    # restrict access to the SOAP provisioning API
    Order Deny,Allow
    Deny from all
    Allow from 127.0.0.1
    # you might add more ip addresses / networks here
    # Allow from 192.168 10 172.16
</Location>

<IfModule mod_proxy_ajp.c>
    ProxyRequests Off
    <Proxy balancer://oxcluster>
        Order deny,allow
        Allow from all
        # multiple server setups need the backend hostname or IP address instead of localhost
        BalancerMember ajp://10.20.30.213:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=OX1
        BalancerMember ajp://10.20.30.215:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=OX2
        ProxySet stickysession=JSESSIONID
    </Proxy>
    <Proxy /ajax>
        ProxyPass balancer://oxcluster/ajax
    </Proxy>
    <Proxy /servlet>
        ProxyPass balancer://oxcluster/servlet
    </Proxy>
    <Proxy /infostore>
        ProxyPass balancer://oxcluster/infostore
    </Proxy>
    <Proxy /publications>
        ProxyPass balancer://oxcluster/publications
    </Proxy>
    <Proxy /Microsoft-Server-ActiveSync>
        ProxyPass balancer://oxcluster/Microsoft-Server-ActiveSync
    </Proxy>
    <Proxy /usm-json>
        ProxyPass balancer://oxcluster/usm-json
    </Proxy>
</IfModule>

Restart the Apache 2 web server and check whether you can connect with a browser. By default, this configuration allows plain HTTP access. To protect the customers' privacy, the connection must be secured with HTTPS based on a valid certificate. It is also recommended to redirect all plain HTTP connections to HTTPS.
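One common way to implement the recommended redirect is a plain-HTTP virtual host that does nothing but issue a permanent redirect with the mod_alias `Redirect` directive. The sketch below prints such a virtual host for a hypothetical host name ox.example.com; it assumes that a separate HTTPS virtual host with a valid certificate is already configured, and the target file name in the comment is only an example.

```shell
# Print an Apache virtual host that redirects all plain HTTP requests to HTTPS.
# The host name passed in is a hypothetical public name of the OX frontend.
http_redirect_vhost() {
    host=$1
    cat <<EOF
<VirtualHost *:80>
    ServerName $host
    # send every request to the same path on the HTTPS site
    Redirect permanent / https://$host/
</VirtualHost>
EOF
}

http_redirect_vhost ox.example.com
# Possible use on Debian (example path): write the output to
# /etc/apache2/sites-available/ox-redirect.conf, then run
# "a2ensite ox-redirect" and restart Apache.
```

Because `Redirect` matches by URL prefix, a request for /ajax/login is sent to https://ox.example.com/ajax/login.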

Enable the required Apache modules on the web server. See the general installation guides for more information about configuring expires and deflate.

$ a2enmod proxy && a2enmod proxy_ajp && a2enmod proxy_balancer && a2enmod expires && a2enmod deflate && a2enmod headers

Restart the Apache web server after applying all configuration changes.

$ /etc/init.d/apache2 restart

Configuring Open-Xchange Server

After adding the Open-Xchange software repository to your package manager configuration, install all relevant Open-Xchange Server packages on both groupware nodes. Installation instructions for your distribution can be found here:

- Download and Installation Guide for Debian GNU/Linux 10.0 (Buster)

- Download and Installation Guide for Debian GNU/Linux 11.0 (Bullseye)

- Download and Installation Guide for RedHat Enterprise Linux 7

- Download and Installation Guide for CentOS 7

It is also possible to install both the backend and the frontend components on each node. The difference is that backend-only nodes require separate machines running the frontend in front of the backend nodes, whereas if you install the backend and the frontend on each node, you only need a load balancer in front of the nodes.

Create the configdb database on the MySQL master. This step only needs to be performed on one of the Open-Xchange Server nodes.

$ /opt/open-xchange/sbin/initconfigdb --configdb-user=openexchange --configdb-pass=secret --configdb-host=10.20.30.217

Set up the Open-Xchange Server configuration. This step must be performed on both groupware nodes. Note that the --jkroute parameter must equal the route parameter of the corresponding server in the web server's proxy_ajp load balancing configuration. Node 1:

$ /opt/open-xchange/sbin/oxinstaller --servername=oxserver --configdb-readhost=10.20.30.219 --configdb-writehost=10.20.30.217 --configdb-user=openexchange --master-pass=secret --configdb-pass=secret --jkroute=OX1 --ajp-bind-port=*

Node 2:

$ /opt/open-xchange/sbin/oxinstaller --servername=oxserver --configdb-readhost=10.20.30.219 --configdb-writehost=10.20.30.217 --configdb-user=openexchange --master-pass=secret --configdb-pass=secret --jkroute=OX2 --ajp-bind-port=*
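Since a mismatch between --jkroute and the route parameter breaks session stickiness, it may be worth checking both sides before continuing. A minimal sketch with a hypothetical helper check_routes, assuming you have a copy of the web server's proxy_ajp.conf and pass the jkroute values used on the nodes as arguments:

```shell
# check_routes CONF ROUTE...: report every jkroute that has no matching
# "route=" entry on an active BalancerMember line in the given proxy_ajp.conf.
check_routes() {
    conf=$1; shift
    # collect the routes configured on the web server (commented lines are skipped)
    configured=$(awk '$1 == "BalancerMember" {
                          for (i = 2; i <= NF; i++)
                              if ($i ~ /^route=/) print substr($i, 7)
                      }' "$conf")
    status=0
    for jk in "$@"; do
        if ! printf '%s\n' "$configured" | grep -qxF -- "$jk"; then
            echo "jkroute $jk has no matching BalancerMember route" >&2
            status=1
        fi
    done
    return $status
}

# Example: check_routes /etc/apache2/conf.d/proxy_ajp.conf OX1 OX2
```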

Start the Open-Xchange daemon on one of the nodes and wait a few seconds until it has started completely.

$ systemctl start open-xchange
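Instead of waiting a fixed number of seconds, a script can poll until a check succeeds. A minimal sketch with a hypothetical helper wait_for; note that `systemctl is-active` only confirms that the unit is active, which may be earlier than the moment the server has started completely, so a generous timeout or an additional application-level check may still be needed.

```shell
# wait_for TRIES COMMAND...: run COMMAND once per second until it succeeds
# or TRIES attempts have failed. Returns 0 on success, 1 on timeout.
wait_for() {
    tries=$1; shift
    while [ "$tries" -gt 0 ]; do
        "$@" && return 0
        tries=$((tries - 1))
        [ "$tries" -gt 0 ] && sleep 1   # no pause after the last attempt
    done
    return 1
}

# Example: give the unit up to 60 seconds to report itself as active.
# wait_for 60 systemctl is-active --quiet open-xchange
```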

Now register the Open-Xchange Server in the database. Note that in this case a "server" is the whole cluster. This step only needs to be performed on one of the Open-Xchange Server nodes.

$ /opt/open-xchange/sbin/registerserver -n oxserver -A oxadminmaster -P secret

Register the filestore. This step only needs to be performed on one of the Open-Xchange Server nodes. Note that the NFS export must be mounted at the same path on both groupware nodes.

$ /opt/open-xchange/sbin/registerfilestore -A oxadminmaster -P secret -t file:///var/opt/filestore

Now register the MySQL master database in configdb. This step only needs to be performed on one of the Open-Xchange Server nodes.

$ /opt/open-xchange/sbin/registerdatabase -A oxadminmaster -P secret --name oxdatabase --hostname 10.20.30.217 --dbuser openexchange --dbpasswd secret --master true
database 4 registered

Note the returned database ID, which is 4 in this case. This value is required to register the MySQL slave database in configdb. This step only needs to be performed on one of the Open-Xchange Server nodes.

$ /opt/open-xchange/sbin/registerdatabase -A oxadminmaster -P secret --name oxdatabase_slave --hostname 10.20.30.219 --dbuser openexchange --dbpasswd secret --master false --masterid=4
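The ID can also be captured from the output instead of being copied by hand. This sketch assumes that registerdatabase prints exactly `database <id> registered`, as in the output shown above; the helper name is hypothetical and the parsing must be adapted if your version prints something else.

```shell
# db_id_from_output TEXT: print the numeric ID from "database <id> registered",
# or nothing if the text does not match that format.
db_id_from_output() {
    printf '%s\n' "$1" | sed -n 's/^database \([0-9][0-9]*\) registered$/\1/p'
}

db_id_from_output "database 4 registered"    # prints 4

# Possible use during setup (requires a running OX installation):
# out=$(/opt/open-xchange/sbin/registerdatabase -A oxadminmaster -P secret \
#       --name oxdatabase --hostname 10.20.30.217 --dbuser openexchange \
#       --dbpasswd secret --master true)
# id=$(db_id_from_output "$out")
# /opt/open-xchange/sbin/registerdatabase -A oxadminmaster -P secret \
#       --name oxdatabase_slave --hostname 10.20.30.219 --dbuser openexchange \
#       --dbpasswd secret --master false --masterid="$id"
```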

Now restart Open-Xchange Server on both groupware nodes.

$ systemctl stop open-xchange
$ systemctl start open-xchange

Create a new context and a test user:

$ /opt/open-xchange/sbin/createcontext -A oxadminmaster -P secret -c 1 -u oxadmin -d "Context Admin" -g Admin -s User -p secret -L defaultcontext -e oxadmin@example.com -q 1024 --access-combination-name=all

$ /opt/open-xchange/sbin/createuser -c 1 -A oxadmin -P secret -u testuser -d "Test User" -g Test -s User -p secret -e testuser@example.com

Test Session load balancing

Apache is configured to balance 50:50 between both Open-Xchange Servers. Now that they are up and running, it is time to check whether this balancing works. This can be done by simply watching the Open-Xchange Server log files while a user logs in. Run tail on the open-xchange.log.0 file on both servers, then log in as testuser. The log file of one of the servers should show something like:

$ tail -fn200 /var/log/open-xchange/open-xchange.log.0
[...]
INFO: Session created. ID: 31060fc80b9e44d38148ef4d5d19963d, Context: 1, User: 3

Then log out and log in again. This time, the session should be created on the other server. On the client side, the JSESSIONID cookie in the browser shows which server the user has logged in to by its trailing route identifier (".OX1" or ".OX2" with the routes configured above). This identifier is set by Open-Xchange Server based on its AJP_JVM_ROUTE attribute.
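When checking many logins, the route can be read from a copied JSESSIONID value with plain parameter expansion. A small sketch with a hypothetical helper; the cookie value below is made up for illustration, and the session ID itself is assumed to contain no dots.

```shell
# route_of_session COOKIE_VALUE: print the route after the dot,
# or nothing if the value carries no route suffix.
route_of_session() {
    case $1 in
        *.*) printf '%s\n' "${1#*.}" ;;
        *)   ;;                        # no dot: session not bound to a route
    esac
}

route_of_session "5f3a9c1e2b7d4a60.OX1"   # prints OX1
```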

Network configuration

Open-Xchange Server uses multicast discovery to find other nodes. Once this discovery has been successful, the groupware nodes will establish TCP connections for cache communication.

Configure a route for the multicast network on the servers' network interface. This needs to be done on all groupware nodes.

$ vim /etc/network/interfaces

[...]
iface eth0 inet static
    [...]
    post-up route add -net 224.0.0.0/8 dev eth0

Check the Open-Xchange Server cache configuration files /opt/open-xchange/etc/groupware/cache.ccf and /opt/open-xchange/etc/admindaemon/cache.ccf on all groupware nodes. Only the very last section (jcs.auxiliary.*) is relevant for distributed caching. Make sure the TcpServers attribute is commented out and the UDP discovery settings are active. Also check the cache configuration in /opt/open-xchange/etc/groupware/sessioncache.ccf.

# jcs.auxiliary.LTCP.attributes.TcpServers=127.0.0.1:57461
jcs.auxiliary.LTCP.attributes.TcpListenerPort=57462
jcs.auxiliary.LTCP.attributes.UdpDiscoveryAddr=224.0.0.1
jcs.auxiliary.LTCP.attributes.UdpDiscoveryPort=6780
jcs.auxiliary.LTCP.attributes.UdpDiscoveryEnabled=true

These settings configure Open-Xchange Server to discover other nodes through the multicast address 224.0.0.1 and UDP port 6780. Note that the TcpListenerPort property differs between the groupware and the admindaemon configuration files. This is required to avoid socket conflicts: the property defines the TCP port on which each process listens for incoming connections from other groupware nodes.
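The check described above can be scripted. A minimal sketch with a hypothetical helper check_cache_ccf: it fails if a TcpServers line is active (not commented out) or if UdpDiscoveryEnabled is not set to true. The auxiliary name (LTCP in the snippet) is assumed to possibly differ between files, so the patterns accept any name.

```shell
# check_cache_ccf FILE: verify the distributed-cache settings of one .ccf file.
check_cache_ccf() {
    f=$1
    # this guide requires the TcpServers attribute to be commented out
    if grep -q '^[[:space:]]*jcs\.auxiliary\.[A-Za-z0-9_]*\.attributes\.TcpServers=' "$f"; then
        echo "$f: TcpServers is active, comment it out" >&2
        return 1
    fi
    # UDP discovery must be switched on
    if ! grep -q '^[[:space:]]*jcs\.auxiliary\.[A-Za-z0-9_]*\.attributes\.UdpDiscoveryEnabled=true[[:space:]]*$' "$f"; then
        echo "$f: UdpDiscoveryEnabled=true not found" >&2
        return 1
    fi
    return 0
}

# Example:
# for f in /opt/open-xchange/etc/groupware/cache.ccf \
#          /opt/open-xchange/etc/admindaemon/cache.ccf \
#          /opt/open-xchange/etc/groupware/sessioncache.ccf; do
#     check_cache_ccf "$f"
# done
```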

Restart networking on both groupware nodes to activate the new multicast route. Also restart the Open-Xchange Server processes on all nodes.

$ /etc/init.d/networking restart
$ /etc/init.d/open-xchange restart

Test the network settings

The new routing information for the multicast network should be available when printing the routing table.

$ route -n
[...]
224.0.0.0 0.0.0.0 255.0.0.0 U 0 0 0 eth0
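To check this from a script, the routing table can be searched for a 224.0.0.0 entry with netmask 255.0.0.0 on the expected interface. A sketch with a hypothetical helper, assuming the `route -n` column layout (Destination, Gateway, Genmask, Flags, Metric, Ref, Use, Iface):

```shell
# has_multicast_route IFACE: succeed if the `route -n` output on stdin
# contains the 224.0.0.0/255.0.0.0 route bound to IFACE.
has_multicast_route() {
    awk -v iface="$1" '
        $1 == "224.0.0.0" && $3 == "255.0.0.0" && $NF == iface { found = 1 }
        END { exit !found }'
}

# Example: route -n | has_multicast_route eth0 && echo "multicast route present"
```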

TCP connections that are created after the UDP multicast discovery are shown with netstat.

$ netstat -tlpa | grep java | grep ESTABLISHED
Proto Recv-Q Send-Q Local Address Foreign Address State
tcp6 0 0 oxgw01:49103 oxgw02:57461 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:37912 oxgw02:57462 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:58849 oxgw02:49302 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:57462 oxgw02:46054 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:57462 oxgw01:41904 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:48628 oxgw02:57461 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:57461 oxgw02:47115 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:57461 oxgw02:57348 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:57461 oxgw01:42589 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:43960 oxgw02:57462 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:41904 oxgw01:57462 ESTABLISHED 3582/java
tcp6 0 0 oxgw01:42589 oxgw01:57461 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:43786 oxgw02:57461 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:35196 oxgw02:58849 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:57462 oxgw02:44548 ESTABLISHED 3706/java
tcp6 0 0 oxgw01:57461 oxgw02:44893 ESTABLISHED 3582/java

How can these connections be verified? The last column shows the process ID (PID) of the local process that owns the connection. In this case, PID 3706 is the Open-Xchange Groupware Daemon and PID 3582 is the Open-Xchange Administration Daemon. These services build mesh connections between each groupware, each admindaemon and each foldercache service. Some connections are used bidirectionally, so only one connection is visible; others use two connections (inbound and outbound), depending on the network responses. It is important that each service is connected to every other service, while the foldercache is only connected between groupware services. After Open-Xchange Server has been started, it can take some time until all connections are established. In this example, the first two lines show connections between the local groupware process and the remote admindaemon and groupware processes.
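For a quick overview instead of reading the list line by line, the ESTABLISHED connections can be counted per local process and remote host. A minimal sketch with a hypothetical helper, assuming netstat output in the format shown above (fifth column: foreign address, sixth: state, last: PID/program):

```shell
# conn_summary: read `netstat -tlpa` output on stdin and print one line
# "PID remote-host count" per pair, counting only ESTABLISHED connections.
conn_summary() {
    awk '$6 == "ESTABLISHED" {
            split($NF, p, "/")            # "3706/java" -> p[1] = "3706"
            host = $5
            sub(/:[0-9]+$/, "", host)     # strip the port from the foreign address
            count[p[1] " " host]++
         }
         END { for (k in count) print k, count[k] }' | sort
}

# Example: netstat -tlpa | grep java | conn_summary
```

A local process that shows no connections to a remote host indicates that the mesh is not (yet) complete for that pair.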

You should also install and configure the OXtender for Business Mobility:

Exchange ActiveSync configuration for Open-Xchange

Installation Steps depending on your environment - Instructions & Recommendations

The following components need to be implemented in your environment.

Implement Load Balancer

A load balancer in front of the OX servers is necessary for this deployment size. It needs to handle the requests if one OX server fails.

If you already have a hardware load balancing solution in place, this can be used. OX is known to work with the standard load balancing solutions from BigIP, Barracuda, Foundry, ...

If you do not have a load balancing solution in place yet, we recommend Keepalived as a reliable and cost-effective solution.

Read more about configuring Keepalived for Open-Xchange

Connect Control Panel

You need a Control Panel to create and edit users.

OX is designed to integrate into any solution you may already run in your environment, as well as into widespread solutions such as the Parallels control panels.

If you do not run hosting services today and do not have a Control Panel in place, it is recommended to use Plesk to manage OX. This combination gives you a fully functional hosting platform containing everything you need.

Integrate your own Control Panel

If you already have a Control Panel in production, you should integrate OX with it. It is recommended to use the SOAP provisioning Interface for that purpose.

Read more about: Provisioning using SOAP

The Command Line Tools are a good starting point for testing and understanding the necessary commands. They offer exactly the same calls as the SOAP API.

Read more about: Open-Xchange CLT

Integrate with Parallels Automation (POA)

Parallels Operations Automation (POA) is an operations support system (OSS) for service providers who want to differentiate their offerings in order to reduce customer churn and attract new customers. Additionally, the APS package adds a high-performance, best-in-class email service for Parallels Plesk Panel customers.

Authentication

To avoid password synchronization issues, it is recommended to use your existing email authentication mechanism within OX. This way you do not need to add user passwords to OX; a plugin simply authenticates users against your IMAP server.

Read more about the IMAP Authentication Plugin

Connect Email System

Every email system providing IMAP and SMTP can be used as a backend for OX. The best experience has been made with the widespread Linux-based IMAP servers Dovecot, Cyrus and Courier.

Other IMAP servers need to be tested thoroughly before going into production.

There are several possibilities to implement the Email system:

- You already have an email system available: nothing needs to be installed, it only needs to be configured

- You use Parallels Automation (POA): nothing special needs to be done, everything you need is contained in the APS package

- You want to set up a new email system: it is recommended to use Dovecot, as it is very stable, fast, feature-rich and easy to scale

Dovecot Setup

If you want to set up a new email system based on Dovecot, it is recommended to use NFS as the storage backend and to install at least two Dovecot servers accessing this storage. This setup gives you the best scalability and high availability with a minimum of complexity and hardware.

Read more in the Dovecot documentation including a QuickConfiguration guide

Recommended Optional Next Steps

You will find plenty of additional documentation for customization of OX in our knowledge base [1]

When the main setup is completed, we recommend starting with the following articles to enhance your system and make it more attractive to your users.

Automated Frontend Tests

It is a good idea to verify the functionality of your freshly set up and integrated system. Our QA department does this with tests that run automatically against the web frontend. We publish these tests with every release and recommend using them to verify your environment after every update.

Read more about Automated_GUI_Tests

Monitoring / Statistics

It is recommended to implement at least a minimal monitoring/statistics solution to get an overview of the system's health. If you have a support contract with Open-Xchange, it is very helpful if the support team can access the monitoring graphs. Example scripts for basic monitoring with [Munin] are available.

Read more about installing and configuring Munin scripts for Open-Xchange

Upsell Plugin / Webmail Replacement

If you want to use your OX-based webmail system to upsell premium functions such as full groupware functionality or push to mobile phones, it is strongly recommended to use the in-app sales process.

Read more about Upsell

Branding

If you want OX to look more like your own Corporate Identity, including your logo, product name and maybe your colors, this can be easily achieved by changing the logos and stylesheets.

Read more about: Gui_Theming_Description

Read more about: Gui Branding Plugins

Read more about: Branding via the ConfigCascade

Backup

It is recommended to back up your OX installation regularly. Any backup solution for Linux can be used for this.

Read more about Backup your Open-Xchange installation