AppSuite:Grizzly

Grizzly based backend

Before OX App Suite 7.0.1, communication between the HTTP server and the OX backend server was limited to AJP. Starting with release 7.0.1, OX App Suite offers a second, HTTP-based connector for the communication between the HTTP server and the backend. This connector is based on Oracle's Project Grizzly, an NIO and web framework. AJP is deprecated as of 7.4 and will be discontinued with 7.6.0.

Packages

Next, we'll describe the packages needed to install a Grizzly-based backend and explain in depth how the open-xchange package depends on open-xchange-grizzly.

HttpService as packaging dependency

The open-xchange package depends on a virtual package called open-xchange-httpservice. This service is provided by the open-xchange-grizzly package.

Install on OX App Suite

Debian GNU/Linux 11.0

Add the following entry to /etc/apt/sources.list.d/open-xchange.list if not already present:

```
deb https://software.open-xchange.com/products/appsuite/stable/backend/DebianBullseye/ /

# if you have a valid maintenance subscription, please uncomment the
# following and add the ldb account data to the url so that the most recent
# packages get installed
# deb https://[CUSTOMERID:PASSWORD]@software.open-xchange.com/products/appsuite/stable/backend/updates/DebianBullseye/ /
```

and run

```
$ apt-get update
$ apt-get install open-xchange-grizzly
```

Debian GNU/Linux 12.0

Add the following entry to /etc/apt/sources.list.d/open-xchange.list if not already present:

```
deb https://software.open-xchange.com/products/appsuite/stable/backend/DebianBookworm/ /

# if you have a valid maintenance subscription, please uncomment the
# following and add the ldb account data to the url so that the most recent
# packages get installed
# deb https://[CUSTOMERID:PASSWORD]@software.open-xchange.com/products/appsuite/stable/backend/updates/DebianBookworm/ /
```

and run

```
$ apt-get update
$ apt-get install open-xchange-grizzly
```

Cluster setup

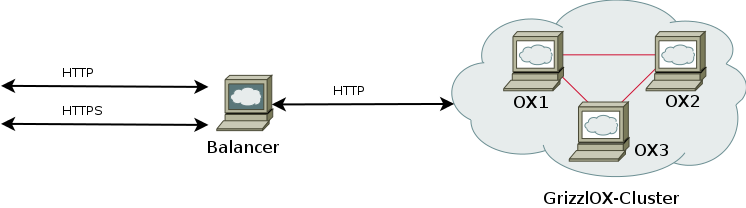

This picture shows our default cluster setup. It consists of a proxying balancer (in our case Apache) and a cluster of multiple OX App Suite backends. The balancer receives HTTP and/or HTTPS requests. It then decides which requests should be handled by itself and which should be forwarded, so they can be handled by one of the OX App Suite backends. That's where the AJP or HTTP connector is used.

Configuration

As requests pass the balancer first, we'll start with a look at how to configure the balancer before configuring the Grizzly-based OX App Suite backends.

Apache configuration

Now that the Open-Xchange server has been set up and the database is running, configure the Apache web server and the mod_proxy_http module to access the groupware frontend. First enable the required Apache modules:

```
$ a2enmod proxy proxy_http proxy_balancer expires deflate headers rewrite mime setenvif
```

If you used AJP before, you have to delete /etc/apache2/conf.d/proxy_ajp.conf.

Configure the mod_proxy_http module by creating a new Apache configuration file.

```
$ vim /etc/apache2/conf.d/proxy_http.conf
```

```apache
<IfModule mod_proxy_http.c>
    ProxyRequests Off
    ProxyStatus On
    # When enabled, this option will pass the Host: line from the incoming request to the proxied host.
    ProxyPreserveHost On

    # Please note that the servlet path to the soap API has changed:
    <Location /webservices>
        # restrict access to the soap provisioning API
        Order Deny,Allow
        Deny from all
        Allow from 127.0.0.1
        # you might add more ip addresses / networks here
        # Allow from 192.168 10 172.16
    </Location>

    # The old path is kept for compatibility reasons
    <Location /servlet/axis2/services>
        Order Deny,Allow
        Deny from all
        Allow from 127.0.0.1
    </Location>

    # Enable the balancer manager mentioned in
    # https://oxpedia.org/wiki/index.php?title=AppSuite:Running_a_cluster#Updating_a_Cluster
    <IfModule mod_status.c>
        <Location /balancer-manager>
            SetHandler balancer-manager
            Order Deny,Allow
            Deny from all
            Allow from 127.0.0.1
        </Location>
    </IfModule>

    <Proxy balancer://oxcluster>
        Order deny,allow
        Allow from all
        # multiple server setups need to have the hostname inserted instead of localhost
        # for sticky sessions, route must match com.openexchange.server.backendRoute of that backend
        BalancerMember http://localhost:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=APP1
        # Enable and maybe add additional hosts running OX here
        # BalancerMember http://oxhost2:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=APP2
        ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On
        SetEnv proxy-initial-not-pooled
        SetEnv proxy-sendchunked
    </Proxy>

    # The standalone documentconverter(s) within your setup (if installed)
    # Make sure to restrict access to backends only
    # See: http://httpd.apache.org/docs/$YOUR_VERSION/mod/mod_authz_host.html#allow for more infos
    #<Proxy balancer://oxcluster_docs>
    #    Order Deny,Allow
    #    Deny from all
    #    Allow from backend1IP
    #    BalancerMember http://converter_host:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 keepalive=On route=APP3
    #    ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On
    #    SetEnv proxy-initial-not-pooled
    #    SetEnv proxy-sendchunked
    #</Proxy>

    # Define another Proxy Container with different timeout for the sync clients. Microsoft recommends a minimum value of 15 minutes.
    # Setting the value lower than the one defined as com.openexchange.usm.eas.ping.max_heartbeat in eas.properties will lead to connection
    # timeouts for clients. See http://support.microsoft.com/?kbid=905013 for additional information.
    #
    # NOTE for Apache versions < 2.4:
    # When using a single node system or using BalancerMembers that are assigned to other balancers please add a second hostname for that
    # BalancerMember's IP so Apache can treat it as additional BalancerMember with a different timeout.
    #
    # Example from /etc/hosts: 127.0.0.1 localhost localhost_sync
    #
    # Alternatively select one or more hosts of your cluster to be restricted to handle only eas/usm requests
    <Proxy balancer://oxcluster_sync>
        Order deny,allow
        Allow from all
        # multiple server setups need to have the hostname inserted instead of localhost
        BalancerMember http://localhost_sync:8009 timeout=1900 smax=0 ttl=60 retry=60 loadfactor=50 route=APP1
        # Enable and maybe add additional hosts running OX here
        # BalancerMember http://oxhost2:8009 timeout=1900 smax=0 ttl=60 retry=60 loadfactor=50 route=APP2
        ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On
        SetEnv proxy-initial-not-pooled
        SetEnv proxy-sendchunked
    </Proxy>

    # When specifying additional mappings via the ProxyPass directive be aware that the first matching rule wins. Overlapping urls of
    # mappings have to be ordered from longest URL to shortest URL.
    #
    # Example:
    # ProxyPass /ajax balancer://oxcluster_with_100s_timeout/ajax
    # ProxyPass /ajax/test balancer://oxcluster_with_200s_timeout/ajax/test
    #
    # Requests to /ajax/test would have a timeout of 100s instead of 200s
    #
    # See:
    # - http://httpd.apache.org/docs/current/mod/mod_proxy.html#proxypass Ordering ProxyPass Directives
    # - http://httpd.apache.org/docs/current/mod/mod_proxy.html#workers Worker Sharing
    ProxyPass /ajax balancer://oxcluster/ajax
    ProxyPass /appsuite/api balancer://oxcluster/ajax
    ProxyPass /drive balancer://oxcluster/drive
    ProxyPass /infostore balancer://oxcluster/infostore
    ProxyPass /realtime balancer://oxcluster/realtime
    ProxyPass /servlet balancer://oxcluster/servlet
    ProxyPass /webservices balancer://oxcluster/webservices
    #ProxyPass /documentconverterws balancer://oxcluster_docs/documentconverterws
    ProxyPass /usm-json balancer://oxcluster_sync/usm-json
    ProxyPass /Microsoft-Server-ActiveSync balancer://oxcluster_sync/Microsoft-Server-ActiveSync
</IfModule>
```
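The comments in this configuration state that the first matching ProxyPass rule wins, which is why overlapping paths must be listed from longest to shortest. The following Java sketch models that behaviour to make the `/ajax` versus `/ajax/test` example concrete. It is a simplified, hypothetical model (ProxyPassTable is not part of Apache or Open-Xchange): it assumes a rule matches its own path and anything below it on a `/` boundary, appends the unmatched remainder to the target URL, and ignores regular-expression rules and worker sharing.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of ProxyPass evaluation: rules are checked in declaration order. */
final class ProxyPassTable {
    private static final class Rule {
        final String path;
        final String target;

        Rule(String path, String target) {
            this.path = path;
            this.target = target;
        }
    }

    private final List<Rule> rules = new ArrayList<>();

    /** Adds a rule; call in the same order the ProxyPass lines appear in the configuration. */
    void add(String path, String target) {
        rules.add(new Rule(path, target));
    }

    /** Returns the backend URL of the first matching rule, or null if no rule matches. */
    String route(String requestPath) {
        for (Rule rule : rules) {
            // Assumption: a rule matches its exact path or anything below it.
            boolean matches = requestPath.equals(rule.path)
                    || requestPath.startsWith(rule.path + "/");
            if (matches) {
                // The first match wins; the unmatched remainder is appended to the target.
                return rule.target + requestPath.substring(rule.path.length());
            }
        }
        return null;
    }
}
```

Adding `/ajax` before `/ajax/test` sends `/ajax/test` to the 100s balancer; reversing the two `add` calls sends it to the 200s balancer, as the configuration comment describes.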

Modify the default website settings to display the Open-Xchange GUI:

```
$ vim /etc/apache2/sites-enabled/000-default
```

```apache
<VirtualHost *:80>
    ServerAdmin webmaster@localhost
    DocumentRoot /var/www/

    <Directory /var/www/>
        Options -Indexes +FollowSymLinks +MultiViews
        AllowOverride None
        Order allow,deny
        allow from all
        RedirectMatch ^/$ /appsuite/
    </Directory>

    <Directory /var/www/appsuite>
        Options None +SymLinksIfOwnerMatch
        AllowOverride Indexes FileInfo
    </Directory>
</VirtualHost>
```

If you want to secure your Apache setup via HTTPS (which is highly recommended) or if you have proxies in front of your Apache, please follow the instructions in the following sections to properly inform the backend about the security status of the connection and the remote IP used to contact the backend:

- Grizzly configuration in general, and specifically:
  - X-FORWARDED-PROTO Header
  - X-FORWARDED-FOR Header

X-FORWARDED-PROTO Header

The Cluster setup section shows that HTTPS communication is terminated by the Apache balancer in front of the OX backends. To let the backends know which protocol the client used to communicate with the Apache server, a special header has to be set in Apache's SSL virtual host configurations to forward this information. The de facto standard for this is the X-Forwarded-Proto header.

To add it to your Apache configuration, open the default SSL virtual host. On Debian systems, run:

```
$ vim /etc/apache2/sites-enabled/default-ssl
```

On RHEL and CentOS, run:

```
$ vim /etc/httpd/conf.d/ssl.conf
```

and add

```apache
RequestHeader set X-Forwarded-Proto "https"
```

to each of the virtual hosts used to communicate with the OX App Suite backends.
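To illustrate what a backend can do with this header, here is a minimal Java sketch of deciding whether the original client connection used HTTPS although the balancer talks plain HTTP to the backend. It is a hypothetical helper, not Open-Xchange code: ForwardedProtoCheck and isSecure are illustrative names, header names are assumed to be lower-cased by the caller, and the trustForwards flag is an assumption modelled loosely on the idea behind com.openexchange.server.considerXForwards (forwarded headers can be spoofed and should only be trusted behind a proxy that overwrites them).

```java
import java.util.Map;

/** Hypothetical sketch: detect HTTPS behind an SSL-terminating balancer. */
final class ForwardedProtoCheck {
    private ForwardedProtoCheck() {}

    /**
     * @param headers          request headers, names assumed lower-cased
     * @param connectionScheme scheme of the balancer-to-backend connection, e.g. "http"
     * @param trustForwards    whether forwarded headers may be trusted at all (assumption)
     */
    static boolean isSecure(Map<String, String> headers, String connectionScheme, boolean trustForwards) {
        // Only look at X-Forwarded-Proto if the proxies are known to set it reliably.
        String proto = trustForwards ? headers.get("x-forwarded-proto") : null;
        // Fall back to the scheme of the actual connection when the header is absent or untrusted.
        String scheme = (proto != null) ? proto.trim() : connectionScheme;
        return "https".equalsIgnoreCase(scheme);
    }
}
```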

Grizzly configuration

Available configuration files

/opt/open-xchange/etc/server.properties

This excerpt of server.properties shows the properties that apply to both the Grizzly and the AJP backend.

```properties
...

# DEFAULT ENCODING FOR INCOMING HTTP REQUESTS
# This value MUST be equal to web server's default encoding
DefaultEncoding=UTF-8

...

# The host for the connector's (ajp, http) network listener. Set to "*" if you
# want to listen on all available interfaces.
# Default value: 127.0.0.1, bind to localhost only.
com.openexchange.connector.networkListenerHost=*

# The default port for the connector's (ajp, http) network listener.
# Default value: 8009.
com.openexchange.connector.networkListenerPort=8009

# Specify the max. number of allowed request parameters for the connector (ajp, http)
# Default value: 30
com.openexchange.connector.maxRequestParameters: 30

# To enable proper load balancing and request routing from {client1, client2 ...}
# --> balancer --> {backend1, backend2 ...} we have to append a backend route
# to the JSESSIONID cookies separated by a '.'. It's important that this backend
# route is unique for every single backend behind the load balancer.
# The string has to be a sequence of characters excluding semi-colon, comma and
# white space so the JSESSIONID cookie stays in accordance with the cookie
# specification after we append the backend route to it.
# Default value: OX0
com.openexchange.server.backendRoute=OX1

# Decides if we should consider X-Forward-Headers that reach the backend.
# Those can be spoofed by clients so we have to make sure to consider the headers only if the proxy/proxies reliably override those
# headers for incoming requests.
# Default value: false
com.openexchange.server.considerXForwards = false

# The name of the protocolHeader used to identify the originating IP address of
# a client connecting to a web server through an HTTP proxy or load balancer.
# This is needed for grizzly based setups that make use of http proxying.
# If the header isn't found the first proxy in front of grizzly will be used
# as originating IP/remote address.
# Default value: X-Forwarded-For
com.openexchange.server.forHeader=X-Forwarded-For

# A list of known proxies in front of our httpserver/balancer as comma separated IPs e.g: 192.168.1.50, 192.168.1.51
com.openexchange.server.knownProxies =

...
```
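The backendRoute convention above can be made concrete with a short Java sketch that validates a route, appends it to a session ID and reads it back the way a balancer would for sticky sessions. BackendRoute is a hypothetical helper, not an Open-Xchange class. It assumes, as in Apache's mod_proxy_balancer, that the route is the part of the cookie value after the first '.'; this is also why the route= value of each BalancerMember in the Apache configuration has to equal the backendRoute configured on that backend.

```java
/** Hypothetical sketch of the "<session id>.<backend route>" JSESSIONID convention. */
final class BackendRoute {
    private BackendRoute() {}

    /** A route must be non-empty and must not contain semicolons, commas or whitespace. */
    static boolean isValidRoute(String route) {
        if (route == null || route.isEmpty()) {
            return false;
        }
        for (int i = 0; i < route.length(); i++) {
            char c = route.charAt(i);
            if (c == ';' || c == ',' || Character.isWhitespace(c)) {
                return false;
            }
        }
        return true;
    }

    /** Appends the route to a session ID, separated by '.'. */
    static String appendRoute(String sessionId, String route) {
        if (!isValidRoute(route)) {
            throw new IllegalArgumentException("invalid backend route: " + route);
        }
        return sessionId + "." + route;
    }

    /** Returns the route a balancer would match against route=..., or null if none is present. */
    static String extractRoute(String cookieValue) {
        int dot = cookieValue.indexOf('.');  // assumption: text after the first '.'
        return dot < 0 ? null : cookieValue.substring(dot + 1);
    }
}
```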

/opt/open-xchange/etc/grizzly.properties

Next, we'll look at the parts of grizzly.properties that configure only the Grizzly backend and not the AJP backend.

```properties
# Grizzly.properties
#
# This file configures the grizzly server contained in the package
# open-xchange-grizzly. In your OX setup grizzly is located behind the
# load-balancer and accepts incoming client requests. Communication with the
# load balancer is done via http, e.g via Apache's mod_proxy_http.

### Push technology
################################################################################

# Comet is an umbrella term used to describe a technique allowing a web browser to
# receive almost real time updates from the server. The two most common
# approaches are long polling and streaming. Long polling differs from streaming
# in that each update from the server ultimately results in another follow up
# request from the client.
# Default value: true
com.openexchange.http.grizzly.hasCometEnabled=true

# Bi-directional, full-duplex communications channels over a single TCP
# connection.
# Default value: false
com.openexchange.http.grizzly.hasWebSocketsEnabled=true

### JMX
################################################################################

# Do you want to enable grizzly monitoring via JMX? Default value: true.
com.openexchange.http.grizzly.hasJMXEnabled=true

### Protocol
################################################################################

# Grizzly is able to communicate via AJP besides its default protocol HTTP.
# Do you want to use AJP instead of HTTP?
# Default value: false
com.openexchange.http.grizzly.hasAJPEnabled=false
```

/opt/open-xchange/etc/requestwatcher.properties

Finally, these are the properties that configure the request watchers of the HTTP and AJP backends:

```properties
# Requestwatcher.properties
#
# This file configures the requestwatcher contained in the package
# open-xchange-grizzly. The requestwatcher keeps track of
# incoming requests and periodically checks the age of the currently processing
# requests. If a request exceeds the configured maximum age, information about the
# request and its processing thread is logged either into the configured
# logfiles or syslog depending on your configuration.

# Enable/disable the requestwatcher.
# Default value: true (enabled).
com.openexchange.requestwatcher.isEnabled: true

# Define the requestwatcher's frequency in milliseconds.
# Default value: 30000.
com.openexchange.requestwatcher.frequency: 30000

# Define the maximum allowed age of requests in milliseconds.
# Default value: 60000.
com.openexchange.requestwatcher.maxRequestAge: 60000

# Permission to stop & re-init system (works only for the ajp connector)
com.openexchange.requestwatcher.restartPermission: false
```
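The mechanism described in requestwatcher.properties (track incoming requests, check their age every `frequency` milliseconds, report those older than `maxRequestAge`) can be sketched in Java as follows. This is a simplified, hypothetical model rather than the Open-Xchange implementation: RequestWatcher, register, unregister and findOverdue are illustrative names, logging is reduced to a line on standard error instead of thread and stack information, and restartPermission is not modelled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

/** Hypothetical, simplified request watcher modelled on requestwatcher.properties. */
final class RequestWatcher {
    private final Map<Long, Long> startTimes = new ConcurrentHashMap<>();  // request id -> start time
    private final AtomicLong ids = new AtomicLong();
    private final long maxRequestAgeMillis;

    RequestWatcher(long maxRequestAgeMillis) {
        this.maxRequestAgeMillis = maxRequestAgeMillis;
    }

    /** Called when a request starts; returns an id used to unregister it. */
    long register(long nowMillis) {
        long id = ids.incrementAndGet();
        startTimes.put(id, nowMillis);
        return id;
    }

    /** Called when a request has finished processing. */
    void unregister(long id) {
        startTimes.remove(id);
    }

    /** Returns the ids of all requests whose age exceeds the configured maximum. */
    List<Long> findOverdue(long nowMillis) {
        List<Long> overdue = new ArrayList<>();
        startTimes.forEach((id, start) -> {
            if (nowMillis - start > maxRequestAgeMillis) {
                overdue.add(id);
            }
        });
        return overdue;
    }

    /** Starts the periodic check; the caller must shut down the returned executor. */
    ScheduledExecutorService start(long frequencyMillis) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        timer.scheduleAtFixedRate(
                () -> findOverdue(System.currentTimeMillis())
                        .forEach(id -> System.err.println("Request " + id + " exceeds max request age")),
                frequencyMillis, frequencyMillis, TimeUnit.MILLISECONDS);
        return timer;
    }
}
```

With the default values shown above you would create `new RequestWatcher(60000)` and call `start(30000)`.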

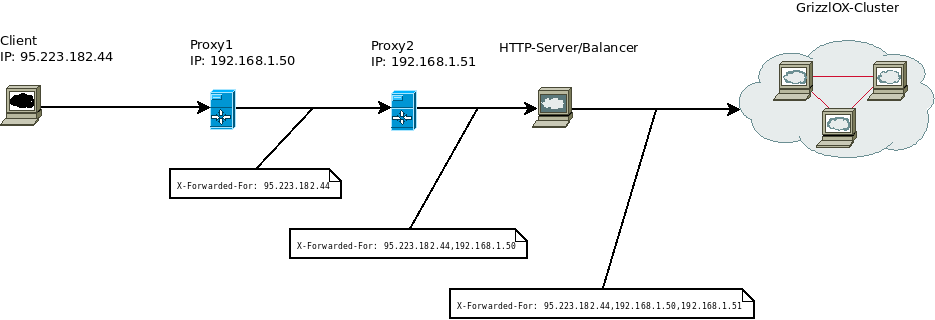

Multiple Proxies in front of the cluster

X-FORWARDED-FOR Header

Wikipedia[1] describes the X-Forwarded-For Header as:

The X-Forwarded-For (XFF) HTTP header field is a de facto standard for identifying the originating IP address of a client connecting to a web server through an HTTP proxy or load balancer. This is an HTTP request header which was introduced by the Squid caching proxy server's developers. An effort has been started at IETF for standardizing the Forwarded HTTP header.

In this context, the caching servers are most often those of large ISPs who either encourage or force their users to use proxy servers for access to the World Wide Web, something which is often done to reduce external bandwidth through caching. In some cases, these proxy servers are transparent proxies, and the user may be unaware that they are using them.

Without the use of XFF or another similar technique, any connection through the proxy would reveal only the originating IP address of the proxy server, effectively turning the proxy server into an anonymizing service, thus making the detection and prevention of abusive accesses significantly harder than if the originating IP address was available. The usefulness of XFF depends on the proxy server truthfully reporting the original host's IP address; for this reason, effective use of XFF requires knowledge of which proxies are trustworthy, for instance by looking them up in a whitelist of servers whose maintainers can be trusted.

Format

The general format of the field is:

- X-Forwarded-For: client, proxy1, proxy2

where the value is a comma+space separated list of IP addresses, the left-most being the original client, and each successive proxy that passed the request adding the IP address where it received the request from. In this example, the request passed through proxy1, proxy2, and then proxy3 (not shown in the header). proxy3 appears as remote address of the request.

The given information should be used with care since it is easy to forge an X-Forwarded-For field. The last IP address is always the IP address that connects to the last proxy, which means it is the most reliable source of information. X-Forwarded-For data can be used in a forward or reverse proxy scenario.

Just logging the X-Forwarded-For field is not always enough: The last proxy IP address in a chain is not contained within the X-Forwarded-For field, it is in the actual IP header. A web server should log BOTH the request's source IP address and the X-Forwarded-For field information for completeness.
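The format rule above (each proxy appends the address it received the request from, so the last proxy never appears in the header) can be traced with a tiny Java sketch. XffHop is a hypothetical helper for illustration only; the example addresses are made up, with the client at 95.223.182.44 and the proxies at 192.168.1.50 and 192.168.1.51.

```java
/** Hypothetical sketch of how each proxy in a chain extends X-Forwarded-For. */
final class XffHop {
    private XffHop() {}

    /**
     * Returns the header a proxy forwards after receiving a request from peerAddress.
     * Entries are joined with a comma and a space, client first.
     */
    static String forward(String incomingXff, String peerAddress) {
        if (incomingXff == null || incomingXff.isBlank()) {
            return peerAddress;  // first proxy: the peer is the original client
        }
        return incomingXff + ", " + peerAddress;
    }
}
```

For a chain client, proxy1, proxy2, proxy3, backend, the backend receives the header written by proxy3 (`client, proxy1, proxy2`) and sees proxy3 only as the remote address of the connection.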

Getting the remote IP

If you want the backends in the Grizzly-based OX cluster to use the client's IP (95.223.182.44) instead of the balancer's IP as the remote address, e.g. for reporting the log property com.openexchange.grizzly.remoteAddress for faulty requests in the Open-Xchange logs, you have to change these parameters in /opt/open-xchange/etc/server.properties from their default values to:

```properties
# Decides if we should consider X-Forward-Headers that reach the backend.
# Those can be spoofed by clients so we have to make sure to consider the headers only if the proxy/proxies reliably override those
# headers for incoming requests.
# Default value: false
com.openexchange.server.considerXForwards = true

# The name of the protocolHeader used to identify the originating IP address of
# a client connecting to a web server through an HTTP proxy or load balancer.
# This is needed for grizzly based setups that make use of http proxying.
# If the header isn't found the first proxy in front of grizzly will be used
# as originating IP/remote address.
# Default value: X-Forwarded-For
com.openexchange.server.forHeader=X-Forwarded-For

# A list of known proxies in front of our httpserver/balancer as comma separated IPs e.g: 192.168.1.50, 192.168.1.51
com.openexchange.server.knownProxies = 192.168.1.50, 192.168.1.51
```

After you have changed the configuration, restart the backend server via

```
$ /etc/init.d/open-xchange restart
```

The remote IP is detected as follows: all configured known proxies are removed from the list of IPs in the X-Forwarded-For header, starting from the right side of the list. The rightmost remaining IP is then used as the new remote address, as it represents the first IP not known to us.
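The detection rule just described can be sketched in Java. RemoteIpResolver is a hypothetical class, not the Open-Xchange implementation. It walks the X-Forwarded-For entries from right to left, skips every configured known proxy and returns the first unknown address. What happens when the header is missing or contains only known proxies is not specified above; this sketch assumes it falls back to the remote address of the actual connection.

```java
import java.util.Set;

/** Hypothetical sketch of remote IP detection from X-Forwarded-For and known proxies. */
final class RemoteIpResolver {
    private RemoteIpResolver() {}

    static String resolve(String xForwardedFor, String connectionRemoteAddress, Set<String> knownProxies) {
        if (xForwardedFor == null || xForwardedFor.isBlank()) {
            return connectionRemoteAddress;  // assumption: no header, keep the connection's address
        }
        String[] hops = xForwardedFor.split(",");
        // Walk from the right-most entry towards the client, skipping known proxies.
        for (int i = hops.length - 1; i >= 0; i--) {
            String ip = hops[i].trim();
            if (!ip.isEmpty() && !knownProxies.contains(ip)) {
                return ip;  // first address we do not know: treat it as the client
            }
        }
        // Assumption: every listed address is a known proxy, keep the connection's address.
        return connectionRemoteAddress;
    }
}
```

Because the scan stops at the first unknown address, a client that forges extra entries on the left of the header (for example `6.6.6.6, 95.223.182.44, 192.168.1.50`) still ends up with 95.223.182.44 as its remote address.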

Make Grizzly speak AJP instead of HTTP

Grizzly normally acts as an HTTP server and requires the balancing proxy in front of it to communicate via HTTP. It can be made to accept the Apache JServ Protocol (AJP) instead by adjusting the Grizzly and Apache configurations and restarting both servers.

Grizzly.properties

Change this parameter from the value shown in the Grizzly configuration section to:

```properties
### Protocol
################################################################################

# Grizzly is able to communicate via AJP besides its default protocol HTTP.
# Do you want to use AJP instead of HTTP?
# Default value: false
com.openexchange.http.grizzly.hasAJPEnabled=true
```

Apache configuration

Change the HTTP-related parameters shown in the Apache configuration section to their AJP equivalents. This involves the IfModule directive and the BalancerMember declarations.

To make Apache communicate via AJP instead of HTTP, you have to replace the proxy_http module with the proxy_ajp module.

```apache
<IfModule mod_proxy_ajp.c>
    ProxyRequests Off
    # When enabled, this option will pass the Host: line from the incoming request to the proxied host.
    ProxyPreserveHost On

    <Proxy balancer://oxcluster>
        Order deny,allow
        Allow from all
        # multiple server setups need to have the hostname inserted instead of localhost
        BalancerMember ajp://localhost:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=OX1
        # Enable and maybe add additional hosts running OX here
        # BalancerMember ajp://oxhost2:8009 timeout=100 smax=0 ttl=60 retry=60 loadfactor=50 route=OX2
        ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On
    </Proxy>

    # Microsoft recommends a minimum timeout value of 15 minutes for eas connections
    <Proxy balancer://eas_oxcluster>
        Order deny,allow
        Allow from all
        # multiple server setups need to have the hostname inserted instead of localhost
        BalancerMember ajp://localhost:8009 timeout=1800 smax=0 ttl=60 retry=60 loadfactor=50 route=OX1
        # Enable and maybe add additional hosts running OX here
        # BalancerMember ajp://oxhost2:8009 timeout=1800 smax=0 ttl=60 retry=60 loadfactor=50 route=OX2
        ProxySet stickysession=JSESSIONID|jsessionid scolonpathdelim=On
    </Proxy>
    ...
</IfModule>
```

```
$ a2dismod proxy_http && a2enmod proxy_ajp
```

Restart the servers

```
$ /etc/init.d/open-xchange restart
$ /etc/init.d/apache2 restart
```

Servlet Filters

Starting with version 7.6.1, you can register your own servlet filters when using a Grizzly-powered OX backend.

This is realized via a ServletFilterTracker that tracks services of type javax.servlet.Filter and updates the registered ServletHandlers so that the filters are applied to new incoming requests/outgoing responses.

Filter Service Properties

Your filter service may be registered with additional com.openexchange.servlet.Constants.FILTER_PATHS and org.osgi.framework.Constants.SERVICE_RANKING properties.

filter.paths

This property may consist of path expressions including wildcards. The path property should be provided as:

- A single String for a single path

- An array of Strings

- A Collection of Objects that provide the path via invocation of toString()

If the filter.paths property is missing or null, the filter will be used for every incoming request.
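The accepted shapes of the filter.paths property can be summarized in a small Java sketch that turns any of them into a list of path strings. FilterPaths and normalize are hypothetical names used only for illustration; the sketch assumes null elements inside a collection are skipped and that any other property type is a configuration error, neither of which is specified above.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collection;
import java.util.Collections;
import java.util.List;

/** Hypothetical helper: normalizes a filter.paths service property into path strings. */
final class FilterPaths {
    private FilterPaths() {}

    /** Returns the configured paths; an empty list means the filter applies to every request. */
    static List<String> normalize(Object property) {
        if (property == null) {
            return Collections.emptyList();                        // missing property: all requests
        }
        if (property instanceof String) {
            return Collections.singletonList((String) property);   // a single path
        }
        if (property instanceof String[]) {
            return Arrays.asList((String[]) property);             // an array of paths
        }
        if (property instanceof Collection) {
            List<String> paths = new ArrayList<>();
            for (Object element : (Collection<?>) property) {
                if (element != null) {
                    paths.add(element.toString());                 // path taken from toString()
                }
            }
            return paths;
        }
        throw new IllegalArgumentException("Unsupported filter.paths type: " + property.getClass());
    }
}
```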

The form of a path must be one of:

- * : This filter will be applied to all requests

- The path starts with / and ends with the /* wildcard but doesn't equal /* e.g. /a/b/* : This filter will be used for requests to all URLs starting with /a/b e.g /a/b/c, /a/b/c/d and so on

- The path starts with / but doesn't end with the /* wildcard : This filter will only be used for requests that match this path exactly
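The three path forms above can be expressed as a matching function. The following Java sketch is hypothetical (FilterPathMatcher is not an Open-Xchange class) and makes two assumptions the text does not settle: a prefix pattern such as /a/b/* matches on a '/' boundary, so /a/bc is not matched, and it also matches the bare prefix /a/b itself, as servlet mappings usually do. A null path is treated like "*", following the rule for a missing filter.paths property.

```java
/** Hypothetical sketch of the documented filter path forms. */
final class FilterPathMatcher {
    private FilterPathMatcher() {}

    static boolean matches(String filterPath, String requestPath) {
        if (filterPath == null || filterPath.equals("*") || filterPath.equals("/*")) {
            return true;  // applies to every request
        }
        if (filterPath.startsWith("/") && filterPath.endsWith("/*")) {
            // "/a/b/*" -> prefix "/a/b"; assumption: match on a '/' boundary
            String prefix = filterPath.substring(0, filterPath.length() - 2);
            return requestPath.equals(prefix) || requestPath.startsWith(prefix + "/");
        }
        if (filterPath.startsWith("/")) {
            return requestPath.equals(filterPath);  // exact match only
        }
        return false;  // not one of the documented forms
    }
}
```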

service.ranking

The filters you register are combined into a filterchain and ordered by this service ranking, which has to be specified as an integer value. Incoming requests will then be processed by the filterchain. If multiple filters have the same service ranking, their order within the filterchain is as follows:

- filters that match all paths, i.e. filters that were registered without a path or with the /* wildcard

- filters that match a prefix of a path e.g. filters that are registered for /a/b/* should be used for requests to /a/b/c, too

- filters that match a path e.g. filters that are registered for /a/b should be used only for requests to /a/b

It's recommended to use unique ranking values for each servlet filter to achieve a reliable filterchain setup.
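The ordering rules can be sketched as a Java comparator: sort by service ranking, then break ties by how broadly the path matches (all paths, then prefix, then exact path). FilterOrdering and Registration are hypothetical names. The text above does not say whether a higher or a lower ranking comes first; this sketch assumes a higher ranking runs earlier, following the usual OSGi convention that a higher service.ranking takes precedence, so verify the direction against your backend before relying on it.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

/** Hypothetical sketch of the filter chain ordering rules; not Open-Xchange code. */
final class FilterOrdering {
    private FilterOrdering() {}

    /** A registered filter: its name, service.ranking and path (null means all paths). */
    static final class Registration {
        final String name;
        final int ranking;
        final String path;

        Registration(String name, int ranking, String path) {
            this.name = name;
            this.ranking = ranking;
            this.path = path;
        }
    }

    /** Tie-breaker for equal rankings: 0 = all paths, 1 = prefix ("/a/b/*"), 2 = exact path. */
    static int specificity(String path) {
        if (path == null || path.equals("*") || path.equals("/*")) {
            return 0;
        }
        return path.endsWith("/*") ? 1 : 2;
    }

    /** Returns the filter names in chain order. */
    static List<String> order(List<Registration> registrations) {
        List<Registration> sorted = new ArrayList<>(registrations);
        // Assumption: a higher service.ranking runs earlier; equal rankings are ordered by specificity.
        sorted.sort(Comparator.comparingInt((Registration r) -> r.ranking).reversed()
                .thenComparingInt(r -> specificity(r.path)));
        List<String> names = new ArrayList<>();
        for (Registration r : sorted) {
            names.add(r.name);
        }
        return names;
    }
}
```

The tie-breaking only applies to equal rankings, which is why unique ranking values give a fully predictable chain.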

Example

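```java
import java.io.IOException;
import java.util.Dictionary;
import java.util.Hashtable;
import javax.servlet.Filter;
import javax.servlet.FilterChain;
import javax.servlet.FilterConfig;
import javax.servlet.ServletException;
import javax.servlet.ServletRequest;
import javax.servlet.ServletResponse;
import org.osgi.framework.Constants;
import org.osgi.service.http.HttpService;
import com.openexchange.osgi.HousekeepingActivator;

public class ServletFilterActivator extends HousekeepingActivator {

    @Override
    protected Class<?>[] getNeededServices() {
        // It is important to await the HttpService before filter registration takes place!
        return new Class<?>[] { HttpService.class };
    }

    @Override
    protected void startBundle() throws Exception {
        Filter yourFilter = new Filter() {

            @Override
            public void destroy() {
                // Nothing to do
            }

            @Override
            public void doFilter(ServletRequest request, ServletResponse response, FilterChain filterChain)
                throws IOException, ServletException {
                // Example: inspect a request parameter before the request continues
                String world = request.getParameter("hello");
                // Always pass the request on, otherwise processing stops at this filter
                filterChain.doFilter(request, response);
            }

            @Override
            public void init(FilterConfig config) throws ServletException {
                // Nothing to do
            }
        };

        Dictionary<String, Object> serviceProperties = new Hashtable<String, Object>(2);
        // Position of this filter within the filterchain
        serviceProperties.put(Constants.SERVICE_RANKING, Integer.valueOf(0));
        // "*" applies the filter to every request
        serviceProperties.put(com.openexchange.servlet.Constants.FILTER_PATHS, "*");
        registerService(Filter.class, yourFilter, serviceProperties);
    }
}
```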